Last updated on March 16th, 2022 at 01:56 am

Using Screaming Frog to discover, crawl, analyze and export your Google Search Console URLs will help you uncover numerous types of indexing and technical issues on your website. Skip to the FAQs at the bottom of the page for specific examples.

The Configuration Settings

- Launch Screaming Frog

- Set default configuration

- Go to menu: File > Configuration > Clear Default Configuration

- Update crawl configuration

- Go to menu: Configuration > Spider

- On the “Crawl” tab, uncheck all of the checkboxes in the “Resource Links”, “Page Links”, “Crawl Behavior” and “XML Sitemaps” sections, then click “OK”

- Go to menu: Mode > select ‘List’

- Go to Menu: Configuration > API Access > select ‘Google Search Console’

- Connect to appropriate user account

- Select appropriate property

- Click the Date Range tab and select date range

- Skip the Dimension Filter tab

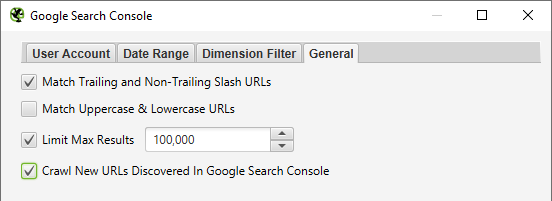

- Click the General tab and check the ‘Crawl New URLs Discovered In Google Search Console’ check box then click ‘OK’

- Find more information on connecting Screaming Frog to the built-in APIs at the Screaming Frog User Guide.

Running the Crawl

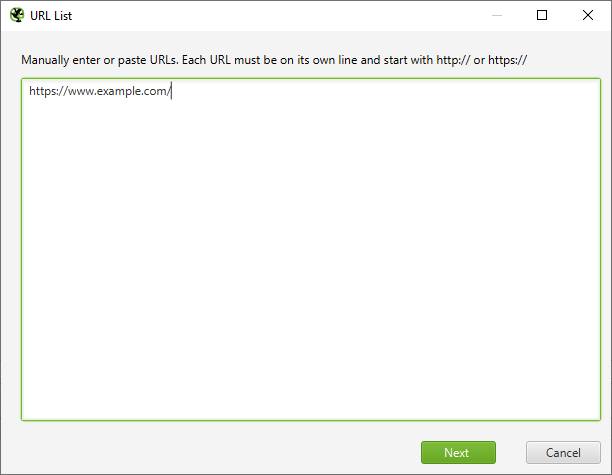

- Click ‘Upload’ and select ‘Enter Manually…’

- Enter just the homepage URL of the web property, click ‘Next’, then click ‘OK’

- After the homepage is crawled, wait. The pages present in Search Console will start importing into Screaming Frog, and you can then export them in whichever format you choose.

Frequently Asked Questions

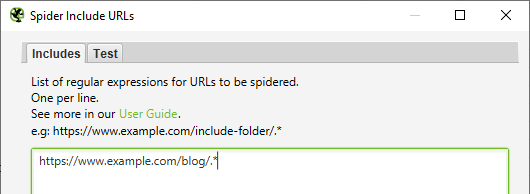

Yes, you can use the include configuration to only include sections of the website you want to export from GSC. You may also use the exclude configuration to exclude specific sections of your website. Go to Menu: Configuration > Include

Then enter the directory you want to include, followed by a dot and an asterisk (.*).

Example of how to include pages from a blog only:
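As a rough illustration, assume the blog lives under a hypothetical https://www.example.com/blog/ directory, so the include entry would be something like `https://www.example.com/blog/.*`. The Python sketch below only mimics that kind of pattern with the standard `re` module. It is not Screaming Frog's own matching code, and the domain, helper name and sample URLs are made up for the example.

```python
import re


def build_include_pattern(directory: str) -> str:
    """Escape the directory literally, then append '.*' (dot and asterisk)."""
    return re.escape(directory) + ".*"


def is_included(url: str, pattern: str) -> bool:
    """Return True when the whole URL matches the include pattern."""
    return re.fullmatch(pattern, url) is not None


# Hypothetical blog directory; swap in your own.
pattern = build_include_pattern("https://www.example.com/blog/")

urls = [
    "https://www.example.com/about/",
    "https://www.example.com/blog/",
    "https://www.example.com/blog/gsc-export-guide/",
    "https://www.example.com/services/seo/",
]

included = [url for url in urls if is_included(url, pattern)]
for url in included:
    print(url)
```

Only the two /blog/ URLs pass the filter here, which is the behaviour you want from a blog-only include rule.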

You will be able to detect status and error code issues, problems with noindex directives, canonical URL discrepancies and many other technical SEO items that may be causing indexing or user experience problems on your website.
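If you export the crawl to CSV, a short script can do a first pass over those checks. This is a minimal sketch, not a full audit. The column names (`Address`, `Status Code`, `Meta Robots 1`, `Canonical Link Element 1`) are assumptions about the export headers, and the sample rows are invented, so adjust both to match your own file.

```python
import csv
import io

# Invented sample standing in for an exported crawl; column names are assumed.
SAMPLE_EXPORT = """Address,Status Code,Meta Robots 1,Canonical Link Element 1
https://www.example.com/,200,,https://www.example.com/
https://www.example.com/old-page/,404,,
https://www.example.com/thank-you/,200,noindex,https://www.example.com/thank-you/
https://www.example.com/shoes/?sort=price,200,,https://www.example.com/shoes/
"""


def find_issues(rows):
    """Return (url, problem) pairs for non-200, noindex and canonical mismatches."""
    issues = []
    for row in rows:
        url = row["Address"].strip()
        status = row["Status Code"].strip()
        robots = row["Meta Robots 1"].lower()
        canonical = row["Canonical Link Element 1"].strip()
        if status != "200":
            issues.append((url, f"status {status}"))
        if "noindex" in robots:
            issues.append((url, "noindex"))
        if canonical and canonical != url:
            issues.append((url, f"canonical points to {canonical}"))
    return issues


rows = list(csv.DictReader(io.StringIO(SAMPLE_EXPORT)))
issues = find_issues(rows)
for url, problem in issues:
    print(f"{url}: {problem}")
```

To run it against a real export, replace the `io.StringIO(SAMPLE_EXPORT)` part with an opened file handle for your CSV.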

GSC limits the number of URLs you can export from its interface. I am not sure whether the API has an overall limit, but I have not hit one yet.
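For context, the Search Console API's Search Analytics query accepts a `rowLimit` of up to 25,000 rows per request and a `startRow` offset, so larger result sets are normally read page by page. The sketch below shows that paging loop in isolation. It makes no claim about how Screaming Frog itself calls the API, and `run_query` and `fake_query` are hypothetical stand-ins so the example runs without credentials.

```python
from typing import Callable, Dict, List

MAX_ROWS_PER_REQUEST = 25_000  # per-request ceiling for rowLimit


def fetch_all_rows(
    run_query: Callable[[Dict], Dict],
    base_request: Dict,
    page_size: int = MAX_ROWS_PER_REQUEST,
) -> List[Dict]:
    """Call run_query repeatedly, advancing startRow until a short page comes back."""
    rows: List[Dict] = []
    start_row = 0
    while True:
        request = dict(base_request, rowLimit=page_size, startRow=start_row)
        response = run_query(request)
        page = response.get("rows", [])  # treat a missing 'rows' key as an empty page
        rows.extend(page)
        if len(page) < page_size:
            break
        start_row += page_size
    return rows


def fake_query(request: Dict) -> Dict:
    """Hypothetical stand-in for a real API call: serves 7 invented page rows."""
    total = 7
    start = request["startRow"]
    end = min(start + request["rowLimit"], total)
    return {
        "rows": [
            {"keys": [f"https://www.example.com/page-{i}/"]}
            for i in range(start, end)
        ]
    }


base_request = {
    "startDate": "2022-01-01",
    "endDate": "2022-03-01",
    "dimensions": ["page"],
}
all_rows = fetch_all_rows(fake_query, base_request, page_size=3)
print(len(all_rows))
```

The small `page_size` is only there to force several pages in the demo. With a real client you would pass a function that sends the request body to the API and returns the parsed response.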

Yes, of course. As long as you have verified access to the web property you want to discover you can do this.

Yep, same process but connect to the GA API instead.

dear eric

this is really a valuable source and has made my day.

your solution offers a really great approach to detect errors & issues. any idea from your experience about how often one should sensibly perform the crawl?

thank you very much for sharing – great work

michael

Hi Michael, thank you for the compliment. I think a standard site should probably do this monthly, or if you already do regular technical audits, make this part of them. You can also learn a lot from running the Page Inventory Crawl with the GSC API connected, so you can review orphan pages and indexable pages that are not receiving any impressions. That will help you find SEO opportunities for specific pages.

~Eric

Dear Eric. I appreciate your detailed answer and your further suggestions. Thank you for that – I’ll check that! Kind regards – michael